Windows 2012 Data Deduplication

Windows Server 2012 was released today and is available for download. The first new feature I wanted to test is Data Deduplication.

Theory

Data Deduplication in Windows Server 2012 runs as a background job, which by default starts every hour. The job runs when the server is idle and does not consume all of the server's free resources. Only one job can run per volume, and it can process about 100 GB per hour. It deduplicates variable-sized chunks (32-128 KB) of files. A nice picture is at the bottom of this page. By default it deduplicates only files that have not been accessed for more than 5 days. It does not deduplicate the following file types: aac, aif, aiff, asf, asx, au, avi, flac, jpeg, m3u, mid, midi, mov, mp1, mp2, mp3, mp4, mpa, mpe, mpeg, mpeg2, mpeg3, mpg, ogg, qt, qtw, ram, rm, rmi, rmvb, snd, swf, vob, wav, wax, wma, wmv, wvx, accdb, accde, accdr, accdt, docm, docx, dotm, dotx, pptm, potm, potx, ppam, ppsx, pptx, sldx, sldm, thmx, xlsx, xlsm, xltx, xltm, xlsb, xlam, xll, ace, arc, arj, bhx, b2, cab, gz, gzip, hpk, hqx, jar, lha, lzh, lzx, pak, pit, rar, sea, sit, sqz, tgz, uu, uue, z, zip, zoo.
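Variable-sized chunks usually come from content-defined chunking: the chunk boundaries depend on the bytes themselves, so inserting data early in a file does not shift every later chunk. Below is a minimal Python sketch of that general technique, bounded to the 32-128 KB range mentioned above. The gear rolling hash, the 16-bit boundary mask and the SHA-256 fingerprints are my own assumptions for illustration; this is not Microsoft's actual algorithm.

```python
import hashlib
import random

MIN_CHUNK = 32 * 1024   # smallest allowed chunk (32 KB)
MAX_CHUNK = 128 * 1024  # largest allowed chunk (128 KB)
MASK = (1 << 16) - 1    # assumed: cut when the low 16 bits of the hash are zero

# Assumed gear table: 256 pseudo-random 32-bit values, fixed seed for repeatability
_rng = random.Random(2012)
GEAR = [_rng.getrandbits(32) for _ in range(256)]


def chunk_boundaries(data: bytes) -> list[int]:
    """Return the end offset of every content-defined chunk in data."""
    ends = []
    start = 0
    n = len(data)
    while start < n:
        limit = min(start + MAX_CHUNK, n)
        cut = limit  # fall back to the maximum size (or the end of the data)
        h = 0
        for i in range(start, limit):
            # Gear hash: older bytes shift out of the 32-bit window
            h = ((h << 1) + GEAR[data[i]]) & 0xFFFFFFFF
            if i + 1 - start >= MIN_CHUNK and (h & MASK) == 0:
                cut = i + 1
                break
        ends.append(cut)
        start = cut
    return ends


def unique_chunk_bytes(data: bytes) -> tuple[int, int]:
    """Return (total bytes, bytes left after storing each distinct chunk once)."""
    seen = set()
    unique = 0
    start = 0
    for end in chunk_boundaries(data):
        digest = hashlib.sha256(data[start:end]).digest()
        if digest not in seen:
            seen.add(digest)
            unique += end - start
        start = end
    return len(data), unique
```

Every chunk except the last one ends up between 32 KB and 128 KB, and identical chunks anywhere in the data are counted only once.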

Let’s play

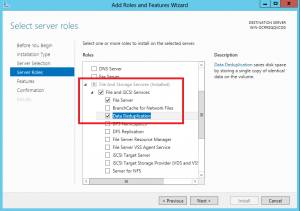

This feature is not enabled by default. You need to enable it with the Add Roles and Features wizard:

When this is done, you can use all the Data Deduplication PowerShell cmdlets. To use these cmdlets you need to run PowerShell as Administrator :-).

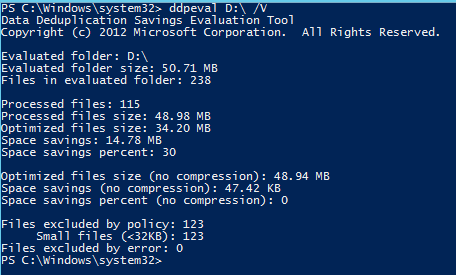

Microsoft engineers also gave us a tool, DDPEVAL.exe, which does a quick check of the data on a drive and reports the expected deduplication savings. You can copy DDPEVAL.exe to another system and run it there to check the expected deduplication ratio before even enabling deduplication.
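I don't know how DDPEVAL.exe works internally, so the following is only a rough Python sketch of how such an estimate could be computed: read every file under a folder, split it into chunks, fingerprint each chunk and count how many bytes would remain if every distinct chunk were stored once. The function name `estimate_savings` and the fixed 64 KB chunk size are hypothetical simplifications (real deduplication uses variable-sized chunks).

```python
import hashlib
from pathlib import Path

CHUNK_SIZE = 64 * 1024  # assumed fixed chunk size, simpler than variable-sized chunks


def estimate_savings(root: str) -> float:
    """Estimate the fraction of bytes under root that chunk-level dedup could save."""
    seen: set[bytes] = set()
    total = 0
    unique = 0
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        with path.open("rb") as f:
            # Read the file chunk by chunk and fingerprint each chunk
            while chunk := f.read(CHUNK_SIZE):
                total += len(chunk)
                digest = hashlib.sha256(chunk).digest()
                if digest not in seen:
                    seen.add(digest)
                    unique += len(chunk)
    return 0.0 if total == 0 else (total - unique) / total
```

Already-compressed files such as MP3s rarely contain repeated chunks, so an estimator like this would report savings close to zero for them.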

So let's copy some files to my new disk D:\ (system drives cannot be deduplicated) and run DDPEVAL.exe to see the expected savings:

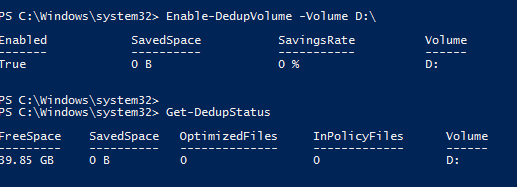

This result was for a couple of Excel and Word files. When I ran DDPEVAL.exe on my Music directory (all MP3s), it reported space savings of 1%. So let's enable data deduplication on disk D:

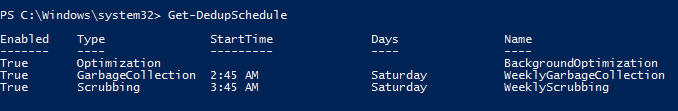

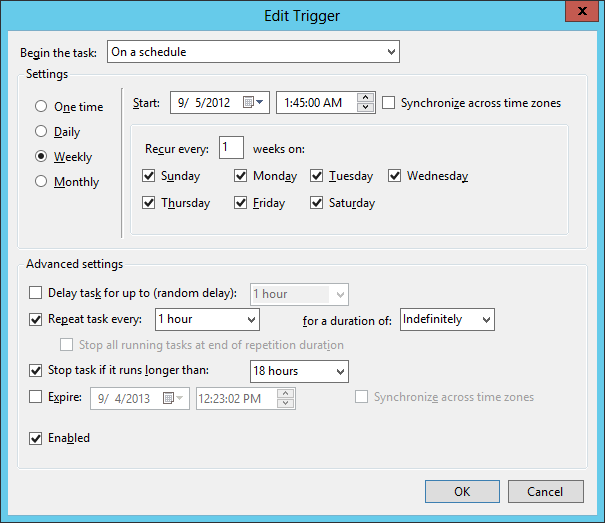

As I mentioned earlier, the data deduplication job runs every hour. Let's look at the deduplication scheduled jobs:

Get-DedupSchedule is not a very good cmdlet, because it doesn't provide full information about the actual schedule (even when you pipe it to | fl *). When you check Task Scheduler, you can see the correct schedule for

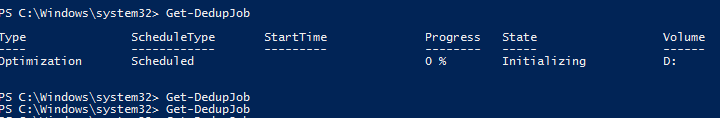

So I will not wait until tomorrow. I will run this task right now and check the result. When I ran it, I could see CPU activity for a short time.
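To get a feel for how long a first pass over a larger volume might take, here is a back-of-the-envelope Python sketch based on the roughly 100 GB per hour per volume mentioned in the Theory section. The function names are hypothetical, and the assumption that jobs on different volumes can run in parallel (one job per volume) is mine; real throughput depends on hardware and load.

```python
RATE_GB_PER_HOUR = 100  # approximate per-volume throughput from the Theory section


def optimization_hours(volume_sizes_gb: dict[str, float],
                       rate: float = RATE_GB_PER_HOUR) -> dict[str, float]:
    """Hours needed to process each volume at the given rate."""
    return {vol: size / rate for vol, size in volume_sizes_gb.items()}


def wall_clock_hours(volume_sizes_gb: dict[str, float],
                     rate: float = RATE_GB_PER_HOUR,
                     parallel: bool = True) -> float:
    """Total elapsed time: the slowest volume if volumes run in parallel, else the sum."""
    per_volume = optimization_hours(volume_sizes_gb, rate)
    if not per_volume:
        return 0.0
    return max(per_volume.values()) if parallel else sum(per_volume.values())
```

For example, 250 GB on D: and 100 GB on E: would take about 2.5 hours if both volumes are processed at the same time, or 3.5 hours one after another.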

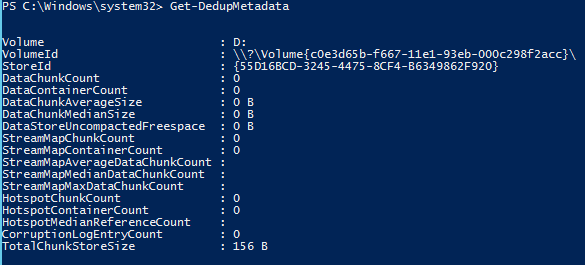

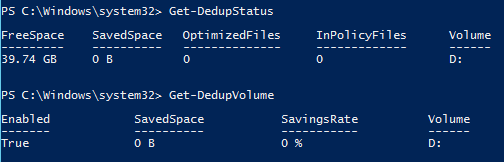

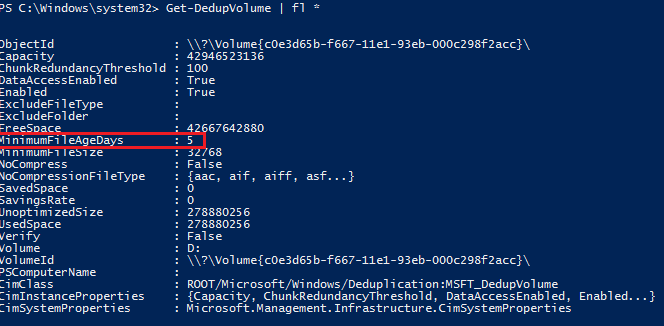

So it looks like nothing was deduplicated. The reason is simple: I had just copied the data to the disk, so its Last Access time is not older than 5 days. As you may remember, by default Data Deduplication does not deduplicate files that have been accessed within the last 5 days. So let's wait for 5 days 🙂 ….. Or better, let's change this 5-day value to something else. Let's look at the values set for the volume:
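The default policy described above boils down to two checks per file: the extension must not be on the exclusion list, and the file must not have been accessed recently. Here is a minimal Python sketch of that logic; the function name `is_eligible` is hypothetical, the extension set is only a subset of the full list from the Theory section, and treating the age as "at least N days" is my assumption.

```python
from datetime import datetime, timedelta
from pathlib import PurePath

# Subset of the default exclusion list from the Theory section (not exhaustive)
EXCLUDED_EXTENSIONS = frozenset({
    "mp3", "mp4", "avi", "wma", "jpeg",
    "docx", "xlsx", "pptx",
    "zip", "rar", "cab", "gz",
})


def is_eligible(name: str, last_access: datetime, now: datetime,
                min_age_days: int = 5,
                excluded: frozenset[str] = EXCLUDED_EXTENSIONS) -> bool:
    """Return True if a file would be picked up by the (modelled) default policy."""
    ext = PurePath(name).suffix.lower().lstrip(".")
    if ext in excluded:
        return False  # excluded file types are never deduplicated
    # Only files that have not been accessed for at least min_age_days qualify
    return now - last_access >= timedelta(days=min_age_days)
```

With `min_age_days=0`, every non-excluded file qualifies immediately, which is the effect of the per-volume change I make next.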

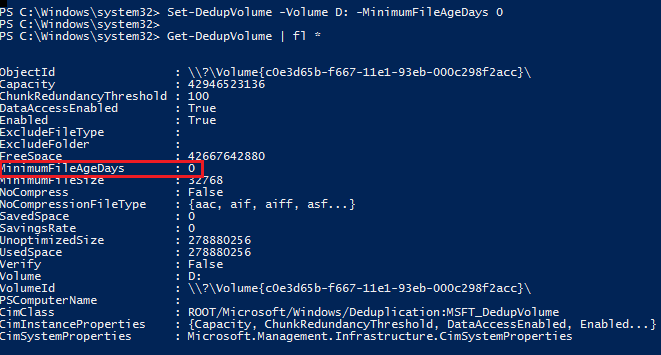

It is a per-volume setting. Let's change it with the Set-DedupVolume cmdlet:

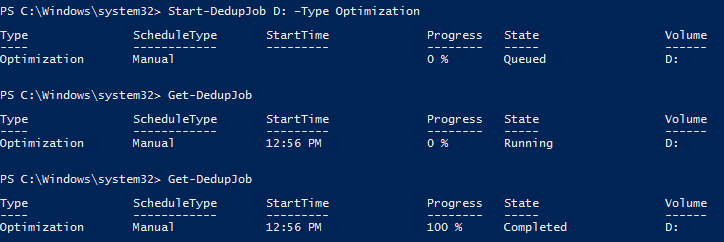

So now I have removed the default 5-day minimum. Let's run BackgroundOptimization again and see what the result is.

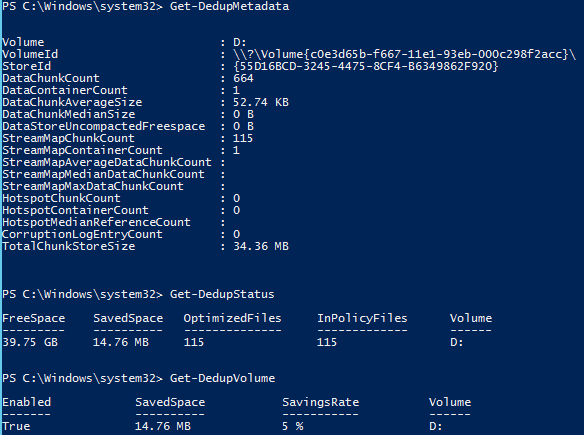

And now, when I look at the status of the metadata and the volume, I see:

The actual SavingsRate is 5%, while DDPEVAL.exe estimated approximately 30% 🙂
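To make the two percentages comparable, here is a small Python sketch of a savings-rate calculation. I am assuming the rate is the saved space divided by the size the data would occupy without deduplication (used space plus saved space); the function name `savings_rate` is hypothetical.

```python
def savings_rate(used_space: int, saved_space: int) -> float:
    """Fraction of the original (undeduplicated) size that deduplication saved.

    Assumption: original size = used_space + saved_space.
    """
    original = used_space + saved_space
    if original == 0:
        return 0.0
    return saved_space / original
```

Under this assumption, 950 MB used with 50 MB saved gives 5%, whereas a 30% estimate would correspond to 700 MB used with 300 MB saved for the same 1000 MB of data.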

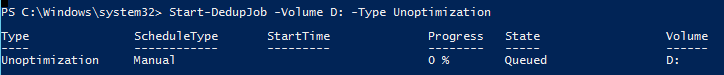

If you want to disable deduplication on a volume, you can do it by running the command:

Metadata is not self-cleaning, so there are two other tasks that clean up metadata and take care of the data deduplication overhead: WeeklyGarbageCollection and WeeklyScrubbing. Their jobs are described here.
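Why cleanup has to be a separate job becomes clearer with a toy model. In the hypothetical `ChunkStore` class below (my own simplified Python sketch, not how Windows stores chunks), deleting a file only removes its list of chunk references; the unreferenced chunk data stays until a mark-and-sweep `garbage_collect` pass reclaims it, and `scrub` re-hashes stored chunks to detect corruption.

```python
import hashlib


class ChunkStore:
    """Toy model of a deduplicated chunk store (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}     # fingerprint -> chunk data
        self.files: dict[str, list[str]] = {}  # file name -> ordered fingerprints

    def add_file(self, name: str, chunks: list[bytes]) -> None:
        fingerprints = []
        for chunk in chunks:
            fp = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(fp, chunk)  # each distinct chunk is stored once
            fingerprints.append(fp)
        self.files[name] = fingerprints

    def read_file(self, name: str) -> bytes:
        # Rebuild the file from its chunk references
        return b"".join(self.chunks[fp] for fp in self.files[name])

    def delete_file(self, name: str) -> None:
        # Only the references go away; chunk data stays until garbage collection
        del self.files[name]

    def garbage_collect(self) -> int:
        """Mark chunks still referenced by a file, sweep the rest; return bytes freed."""
        live = {fp for fps in self.files.values() for fp in fps}
        dead = [fp for fp in self.chunks if fp not in live]
        reclaimed = sum(len(self.chunks[fp]) for fp in dead)
        for fp in dead:
            del self.chunks[fp]
        return reclaimed

    def scrub(self) -> list[str]:
        """Return fingerprints of chunks whose data no longer matches its hash."""
        return [fp for fp, data in self.chunks.items()
                if hashlib.sha256(data).hexdigest() != fp]
```

For example, if two files share one chunk and one of them is deleted, only the chunk that belonged exclusively to the deleted file is freed, and only once garbage collection runs.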

I think this new feature can be useful for every customer I know. All of them store lots of data on file servers that has not been accessed for a long time (sometimes years), and they don't want to just delete these files and then restore them from tape when they are needed.

More information can be found on the Storage Team blog.
